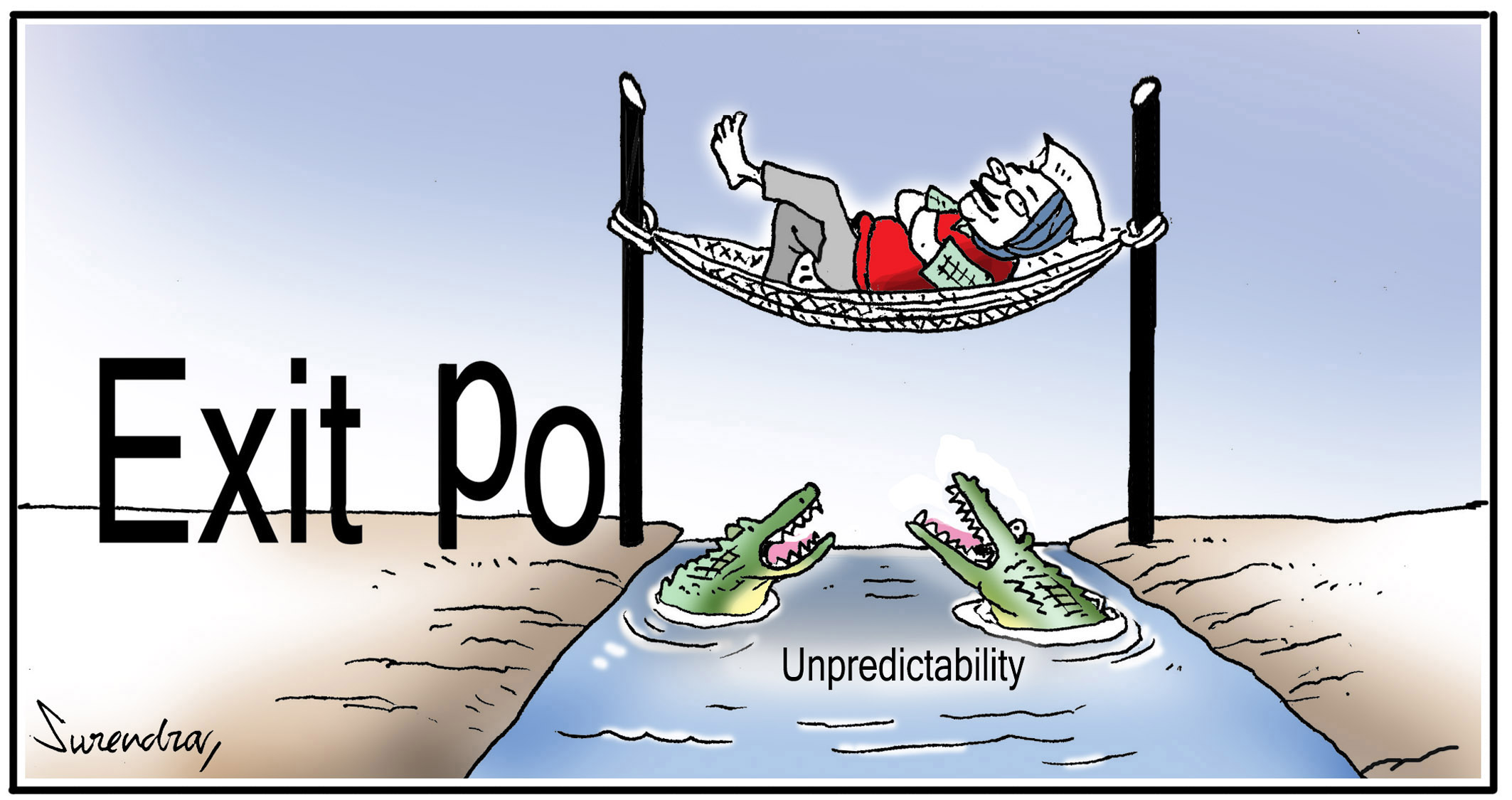

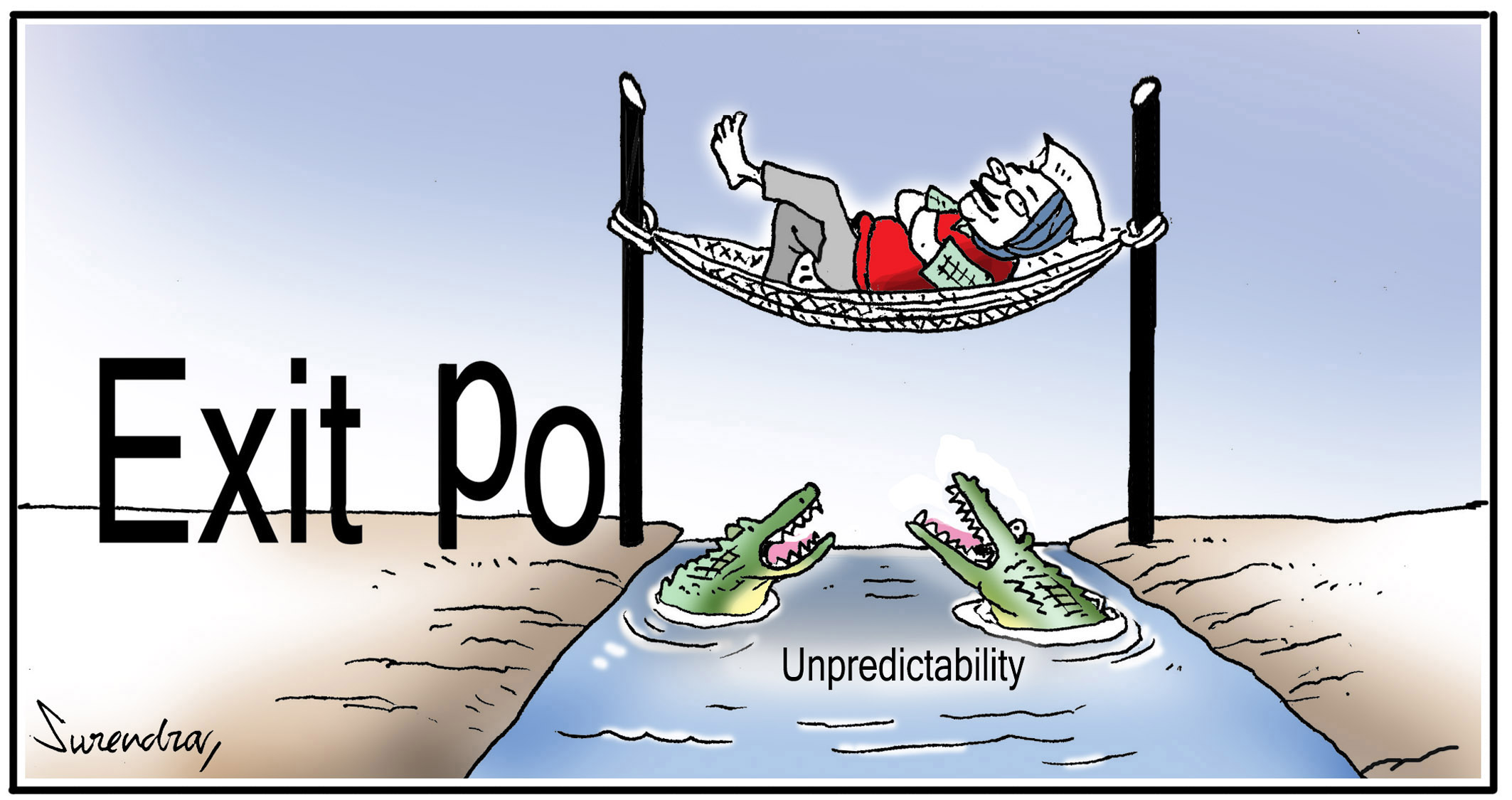

After the Bihar Elections' prediction debacle, pollsters have decided to form a self-regulatory body. Will this help stop their failure streak?

This is unlikely to address the core problems in polling. But it’s a useful start, as it’s the first such endeavor in India.

Firstly, the body consists of just 6 pollsters from New Delhi. The rest of the country does not have access to that body’s data or its output, as a matter of routine. But there are good reasons why pollsters feel cagey about their raw data and that is one of the fundamental problems with polling as well.

Close

Why do Opinion/Exit Polls in India fail so often?

The problems with opinion and exit polling in India aren’t unique.

But India is the world’s largest Democracy. Problems that exist everywhere are further complicated by size, an FPTP system in a multi party electoral fray and a vastly unequal society that has multiple cleavages like language, caste and religion to name a few. The basic reasons for which a survey, which is what a poll is except it asks questions on voting, can go wrong are finite. There can be either be sampling bias or response bias or a combination of the two.

But India is the world’s largest Democracy. Problems that exist everywhere are further complicated by size, an FPTP system in a multi party electoral fray and a vastly unequal society that has multiple cleavages like language, caste and religion to name a few. The basic reasons for which a survey, which is what a poll is except it asks questions on voting, can go wrong are finite. There can be either be sampling bias or response bias or a combination of the two.

Close

What is sampling error or sampling bias? How does that affect polls? Can they be overcome?

The basic idea of a poll or a survey is to take a sufficiently large random sample so that it tends to be representative of the actual voting population.

Sampling errors happen when the chosen sample is not truly random or representative. This can happen for a variety of reasons.

A simple random sample in our case will be one in which a pollster takes every nth voter from a voting list. That however is often difficult. There can be difficulties in reaching every nth person in a country like India. Not everyone has a phone or an easily reachable address. And not every nth person may be a likely voter in case of an opinion poll, given voting isn’t compulsory. And not every nth person may want to give out his or her political choice or opinion.

The other complicating factor towards sampling errors is the absence of demographic data. Census data does not, or at least did not until the last Census, give out caste level population. It has only recently released religion-based data. So, while social fault lines queer the sampling process this is in turn gets impossible to adjust or account for since we do not know the actual demographic split between the various fault lines of society.

Close

What is response rate and how does that affect polling accuracy?

Response rate is the ratio between the number of people who answer or respond to poll and the total number of people who’ve been contacted for the poll.

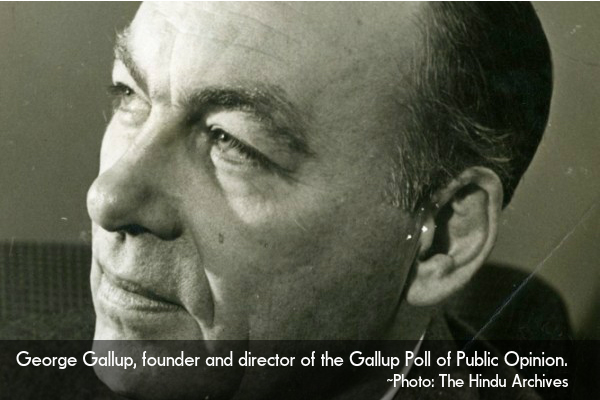

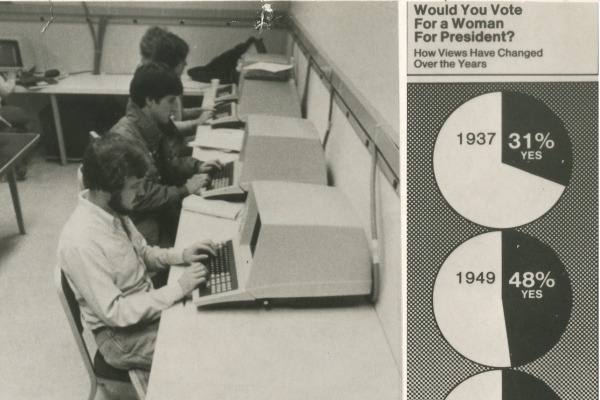

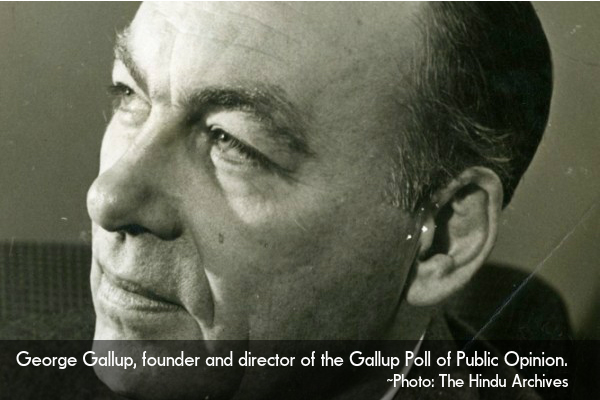

In the USA, where this data is available, the response rate has been in single digits for a long time; if every nth voter is called, less than 10% of those called told the pollster their opinion/whom they voted for. In the 1940s this created a scandal and George Gallup, the pioneer of opinion polling, had to testify in a Congressional hearing about response rates of his polling company. In 1940s, America had only 10% of the population as black and most of them were disenfranchised anyway owing to Jim Crow laws. But even for that relatively homogeneous white voting population, the response rate had fallen to single digits and caused errors.

In India, the problem is complicated manifold. Firstly, telephone surveys don’t work at all. There’s a selection bias that’s too huge to account for if only people with phones were polled. And, there are cultural and linguistic reasons as well: it’s very difficult for someone who speaks in a certain urban way to get a Dalit in a rural area to answer questions on political opinion even if one assume the said Dalit possesses a phone and speaks the same language. This holds true to some degree even if the pollsters go physically to where the voters are.

So the problem then becomes: those who are unafraid and even eager to express their political opinion are the only ones who get counted. This leaves out the silent majority who often swing elections; a sure shot way to be wrong. Add to this the unavailability of demographic splits, which may be useful in arriving at adjustment factors, for oppressed communities if there’s a low response rate. The pollsters have a truly unenviable job.

Close

What is response rate and how does that affect polling accuracy?

Response rate is the ratio between the number of people who answer or respond to poll and the total number of people who’ve been contacted for the poll.

In the USA, where this data is available, the response rate has been in single digits for a long time; if every nth voter is called, less than 10% of those called told the pollster their opinion/whom they voted for. In the 1940s this created a scandal and George Gallup, the pioneer of opinion polling, had to testify in a Congressional hearing about response rates of his polling company. In 1940s, America had only 10% of the population as black and most of them were disenfranchised anyway owing to Jim Crow laws. But even for that relatively homogeneous white voting population, the response rate had fallen to single digits and caused errors.

In the USA, where this data is available, the response rate has been in single digits for a long time; if every nth voter is called, less than 10% of those called told the pollster their opinion/whom they voted for. In the 1940s this created a scandal and George Gallup, the pioneer of opinion polling, had to testify in a Congressional hearing about response rates of his polling company. In 1940s, America had only 10% of the population as black and most of them were disenfranchised anyway owing to Jim Crow laws. But even for that relatively homogeneous white voting population, the response rate had fallen to single digits and caused errors.

In India, the problem is complicated manifold. Firstly, telephone surveys don’t work at all. There’s a selection bias that’s too huge to account for if only people with phones were polled. And, there are cultural and linguistic reasons as well: it’s very difficult for someone who speaks in a certain urban way to get a Dalit in a rural area to answer questions on political opinion even if one assume the said Dalit possesses a phone and speaks the same language. This holds true to some degree even if the pollsters go physically to where the voters are.

In India, the problem is complicated manifold. Firstly, telephone surveys don’t work at all. There’s a selection bias that’s too huge to account for if only people with phones were polled. And, there are cultural and linguistic reasons as well: it’s very difficult for someone who speaks in a certain urban way to get a Dalit in a rural area to answer questions on political opinion even if one assume the said Dalit possesses a phone and speaks the same language. This holds true to some degree even if the pollsters go physically to where the voters are.

So the problem then becomes: those who are unafraid and even eager to express their political opinion are the only ones who get counted. This leaves out the silent majority who often swing elections; a sure shot way to be wrong. Add to this the unavailability of demographic splits, which may be useful in arriving at adjustment factors, for oppressed communities if there’s a low response rate. The pollsters have a truly unenviable job.

Close

Are there other kinds of biases or errors?

Response bias is the other kind of problem that pollsters face.

That is, when the respondents do answer, they can lie. Humans lie for a variety of reasons and in ways that aren’t random. Studies have shown that socially disadvantaged groups in semi-feudal societies tell their interviewers what they think the interviewer wants to hear. This in an electoral democracy with caste politics can play a significant role.

Many surveys, for instance, use college students for the fieldwork. As it happens, colleges tend to be over-represented with male and with upper caste students. The outcome of this is easy to guess: an upper caste, male student is likely end up asking many lower caste voters whom they will be voting for. The probability that the lower caste voter either doesn’t answer or lies outright appears non-negligible.

Close

So response biases/sampling biases and low response rates are a problem. Any examples to support this?

Yes, here's a big one that shows what we're talking about.

Historically, vote share of Dalit parties have been under-estimated by a few percentage points in most exit and opinion polls. Likewise, the vote share for parties that get support from sections of middle and upper castes/classes, such as the BJP, have been over-estimated. This isn’t by any means definitive; however that there is a trend and it needs further study are true.

Close

These are structural problems, right? So how exactly do we solve them?

We can’t get a random sample, we can’t adjust for selection bias because we can’t get the social break up of the voting population right, we can’t account for response rates or the resultant sampling bias and we don’t account for response biases. All of this is made more difficult by the fact that caste level data is unavailable.

The only way in which any of the above problems can be addressed is if the data is made public and there are more and more such polls. Every data point, even when and especially when they are wrong, help us understand these problems a bit better. Sharing data with each other, as the current self-regulating body envisages, is a start. But more importantly, researchers and academics should have access to this data.

Every problem listed above and the many we don’t even know of, can all be understood fully only when the data is made available and independent researchers look at it. It’s possible that clever students and researchers come up with adjustment factors for each of the above problems that may help reduce error. Letting the scientific method work is the best bet humans have had for any and all problems.

Close

Ok, we get the in-built problems. What of pollsters falsifying data intentionally? Can that even be found?

That is a possibility; it’s less likely but not implausible.

When faced with a task as daunting as above, the temptation to fudge the numbers a bit or falsify them entirely is not small. Added reasons such as political bias and the ability of polls to influence people make it even more tempting. So such a regulatory body may be useful on that count. There are certain kinds of fudging and falsification that have been found out in the past. But human ingenuity is ever evolving and new methods of fraud will always emerge.

Close

What are those methods of fraud that have been found? Where and how were they found? Can they be applied to future data sets as well?

Fudging and falsifying data can happen in many ways.

The simplest form of it is manual fudging. This means the pollster simply makes data up wherever it’s missing or makes it up entirely from scratch, but in ways that are manual and not algorithmic. The biggest detecting mechanism against such types of fraud is the fact that human beings are bad at being truly random.

A famous example of this type of fraud was a scandal involving Research 2000/ Daily Kos in polling during American Presidential elections last cycle. The data was found to have even numbered percentage responses for both genders; that is both male and female response rates for a question, such as say likability of Obama, ended in an even number. The probability of that in one question in one poll, which can happen, is 0.5*0.5 = 0.25. But they had even numbered response percentages for both genders for 24 successive polls. That results in an impossibly low probability; so low, it’s less than one in all the atoms in the Universe. Most reasonable people will conclude that’s fudged. But that’s just one way to fudge it. No one is going to do this again given people will now look for this.

Close

What are the algorithmic ways of falsifying data? Is there a case to be made that politically biased polls belong to this kind? Can these be found out? Are there examples of this kind?

Generating a completely false data set, algorithmically, is possible.

A famous example of this kind of data falsification was in the study titled ‘When contact changes minds: An experiment on transmission of support for gay equality’. In it, authors claimed people who’re opposed to gay marriages have a greater likelihood of changing their minds if gay people talk to them about the issue. It’s an intuitively appealing idea and the study consequently gained a lot of traction. However, when others tried to replicate the study, they weren’t able to. When they contacted the polling company that the original authors had cited, the company said it did not do such a survey.

So, naturally, the data was scrutinized at some length by the new researchers. They applied a relatively well-established test called the Kolmogorov-Smirnov test to detect if the data had been falsified. This test is something Physicists use to do far more important things such as detect the presence of new particles. What the test does is: it takes a new data set and finds if it’s merely an existing data set to which some random noise has been added to.

One can imagine a politically motivated pollster who wants to make one party win using this technique. A simple method to generate a new data set for an exit or opinion poll will be to add noise to an actual past result in which the party in question won. The Kolmogorov-Smirnov test can detect this. Even for those of us who did not take courses in Statistical Physics, the test is easy to administer these days given it takes just one line of code in mathematical programming languages such as R or Octave.

Close

Surely there are plenty of other ways to falsify data. Does a self-regulating body sound credible to flag these kinds of errors?

The only way to find out is to make the data available to anyone who wants to analyze.

New methods of falsification and new tests to detect them will be discovered. A self-regulating body, one fears, will hardly be pointing fingers at itself. This is why opening up the data is important since the scientific method will address these aspects much better.

Close

Pollsters should really make their data public. Isn’t that the only way the good ones can distinguish themselves from the bad?

Wel, they can but there's always a catch.

The problem pollsters have in revealing the raw data is two fold: their secret sauce gets revealed and the secret sauce in this business often tends to be a series of assumptions that may be wrong. That is a non-trivial problem to solve.

Close

But India is the world’s largest Democracy. Problems that exist everywhere are further complicated by size, an FPTP system in a multi party electoral fray and a vastly unequal society that has multiple cleavages like language, caste and religion to name a few. The basic reasons for which a survey, which is what a poll is except it asks questions on voting, can go wrong are finite. There can be either be sampling bias or response bias or a combination of the two.

But India is the world’s largest Democracy. Problems that exist everywhere are further complicated by size, an FPTP system in a multi party electoral fray and a vastly unequal society that has multiple cleavages like language, caste and religion to name a few. The basic reasons for which a survey, which is what a poll is except it asks questions on voting, can go wrong are finite. There can be either be sampling bias or response bias or a combination of the two.

In the USA, where this data is available, the response rate has been in single digits for a long time; if every nth voter is called, less than 10% of those called told the pollster their opinion/whom they voted for. In the 1940s this created a scandal and George Gallup, the pioneer of opinion polling, had to testify in a Congressional hearing about response rates of his polling company. In 1940s, America had only 10% of the population as black and most of them were disenfranchised anyway owing to Jim Crow laws. But even for that relatively homogeneous white voting population, the response rate had fallen to single digits and caused errors.

In the USA, where this data is available, the response rate has been in single digits for a long time; if every nth voter is called, less than 10% of those called told the pollster their opinion/whom they voted for. In the 1940s this created a scandal and George Gallup, the pioneer of opinion polling, had to testify in a Congressional hearing about response rates of his polling company. In 1940s, America had only 10% of the population as black and most of them were disenfranchised anyway owing to Jim Crow laws. But even for that relatively homogeneous white voting population, the response rate had fallen to single digits and caused errors.

In India, the problem is complicated manifold. Firstly, telephone surveys don’t work at all. There’s a selection bias that’s too huge to account for if only people with phones were polled. And, there are cultural and linguistic reasons as well: it’s very difficult for someone who speaks in a certain urban way to get a Dalit in a rural area to answer questions on political opinion even if one assume the said Dalit possesses a phone and speaks the same language. This holds true to some degree even if the pollsters go physically to where the voters are.

In India, the problem is complicated manifold. Firstly, telephone surveys don’t work at all. There’s a selection bias that’s too huge to account for if only people with phones were polled. And, there are cultural and linguistic reasons as well: it’s very difficult for someone who speaks in a certain urban way to get a Dalit in a rural area to answer questions on political opinion even if one assume the said Dalit possesses a phone and speaks the same language. This holds true to some degree even if the pollsters go physically to where the voters are.